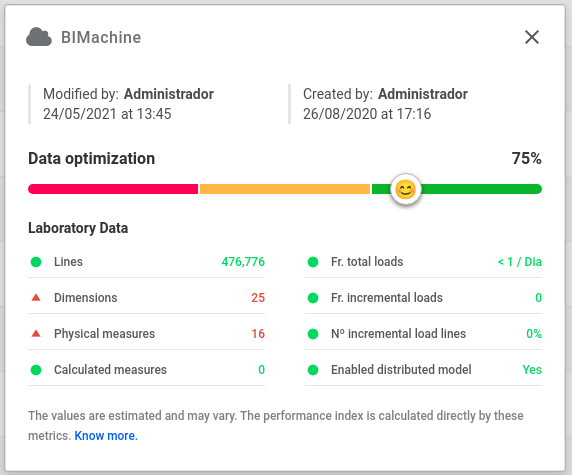

An optimized analytical or data structure is one that meets the business needs of the end user with speed in delivering numbers, practicality in assembling views and agility in data updates. Here you will see how BIMachine performs the optimization index ranking by the 8 criteria shown in the image above.

Optimization Index Criteria

All criteria follow a classification pattern of three ranges: Bad, Regular and Good. Each criterion has characteristics that define the index within each range, and are detailed below.

Line Criteria

This criterion is intended to control the total size of the structure, it sorts based on the total number of data records that are loaded. A structure with many lines indicates that your data is not being handled properly and will likely slow down the update loads, and the delivery of indicators and insights to end users.

The optimization index considers the following parameters for classification:

- More than 1 million lines: BAD

- Between 500 thousand and 1 million lines: REGULAR

- Less than 500 thousand lines: GOOD

If your structure has this BAD index, there are some methods to optimize it, such as:

- Filters and data selection criteria: validate that you are loading the data that is really needed. In many cases we always have a range of data period that we want to analyze, so validate with the business area that consumes the information which period should really be made available

- Grouping of records: check if there is the possibility of summarizing your data, grouping them according to the level of detail required for the analysis

- Breakdown of structures: in some cases it is worth segmenting your structure, creating new structures that contain more detailed information for specific analyses, and leaving the main structure with a lower level of detail, grouping records to reduce the number of lines, and so get faster views.

- Level of detail: the level or granularity of its structure is very important, it defines the level of detail of the information that will be made available to the user. Normally, in BI, information is analyzed at higher and managerial levels, enabling the identification of clues and insights to the business user that are sufficient for decision making. The best thing for granularity in a structure is generally not to descend the detail to the last possible level, such as the number of an invoice, order or financial document

Dimensions Criteria

The number of dimensions within a structure indicates the optimization of its business modeling. A well-modeled analytical structure usually has a small number of dimensions, which should allow the business user to detail the information necessary for decision making.

The optimization index considers the following parameters for classification:

- More than 20 dimensions: BAD

- Between 12 and 20 dimensions: REGULAR

- Less than 12 dimensions: GOOD

If your structure has a BAD index of dimensions, we have some tips to optimize it:

- Concatenation of fields: it is common for information to be taken in different formats, but which have the same purpose in a structure, for example, the separation of Customers into Code and Company Name. In these cases, it is recommended that only one dimension be created that concatenates these two data, thus reducing the total number of dimensions

- Duplicate Dimensions: Another common occurrence is the duplication of dimensions in a structure, make sure you have dimensions that are unique, and that really allow the user to drill down into the data.

- Unusable dimensions: validate if all dimensions that are in your structure really have a function for the business scenario, it is common to add fields without knowing if they will be used and they are forgotten. This only gets in the way of business users, slows down data loads, opens of BI indicators and views

- Structure crossing: when you have a structure that crosses data from two or more structures, such as Billing X Goals, make sure that the dimensions considered in the intersection exist in all structures involved, as it makes no sense to bring one information that only exists in billing for example

Physical Measurement Criteria

The number of physical measurements in a structure is relevant to the business modeling performed. Many measures indicate that the structure was perhaps not well thought out, and may end up hindering the user when it comes to generating their views and business insights, polluting with too many options and generating confusion about which numbers to present are correct.

The optimization index considers the following parameters for classification:

- More than 15 measures: BAD

- Between 10 and 15 measures: REGULAR

- Less than 10 measures: GOOD

If your structure has a BAD index of physical measurements, we have some tips to optimize it:

- Calculations performed: check if you can do the necessary calculations to get your indicators already in the data extraction, addition and subtraction operations can usually be done in the extraction, instead of taking each of the variables to BIMachine and then creating calculated measures

- Unused measures: make sure that all measures you are taking to the structure will actually be used, too many measures hinder the business user in creating their views

- Structure crossing: when you have a structure that crosses data from two or more structures, such as Billing X Targets, make sure that the measures considered in the crossing make sense in the comparison, for example, it is not worth bringing values from billing taxes if there is no comparison target for it

Calculated Measures Criteria

The measures calculated in a structure are fundamental for us to obtain accurate indicators and identify points of improvement in the business scenario.

The optimization index considers the following parameters for classification:

- More than 15 measures: BAD

- Between 10 and 15 measures: REGULAR

- Less than 10 measures: GOOD

If your structure has a BAD index of calculated measures, we have some tips to optimize it:

- Calculations performed: check if you can do the necessary calculations to get your indicators already in the data extraction, addition and subtraction operations can usually be done in the extraction, instead of taking each of the variables to BIMachine and then creating calculated measures

- Unused measures: make sure that all measures you are taking to the structure will actually be used, too many measures hinder the business user in creating their views

- Structure crossover: when you have a structure that crosses data from two or more structures, such as Billing X Targets, make sure that the measures considered in the crossing make sense in the comparison, for example, it is not worth bringing values from billing taxes if there is no comparison target for it

Total Load Frequency Criteria

The full load is intended to refresh all the data in your BI structure, and should only be used when this is really necessary, in order to avoid redundant traffic and information processing. The frequency of total loads on a structure is a very important factor in your BI design, many total loads performed will affect the performance of the business views as a whole, and especially the performance of your data source. For example, if you are looking for data from your ERP, there may be slowdowns for operational users in their daily lives. Ideally, in a data refresh scenario, you should have a maximum full load per day.

The optimization index considers the following parameters for classification:

- More than 1 full load per day: BAD

- 1 full load per day: REGULAR

- Less than 1 full load per day: GOOD

If your structure has a BAD index of frequency of total loads, we have some tips to optimize it:

- Update periods: make sure you really need to update your data, most of the information needs updates that look for data from the last few days, for example your company’s Billing, it’s information that you’ll hardly need to update more than the invoices issued within 30 days, as they no longer undergo adjustments or cancellations

- Incremental loads: an incremental load will erase a certain period of data from its base in BI, and update it with new or changed data, so following the concept covered in the previous item, transform your total loads into incrementals, looking for the period of data that actually underwent changes that should be considered in BI

- Frequency of total loads: if it is really necessary to carry out total loads, try to reduce their frequency, do it at most once a day, however, when possible schedule your loads every 2 days, weekly or even monthly

Incremental Load Frequency Criteria

An incremental load is intended to update a period of data in your structure. It will erase a certain period of data already uploaded to BI, and upload new and updated data as per your extraction configuration. The frequency of incremental updates is an important performance factor for both your analytic views and your data source, where very frequent loads can slow down your ERP system, for example, when they are not well articulated.

The optimization index considers the following parameters for classification:

- More than 15 incremental loads per day: BAD

- Between 10 and 15 incremental loads per day: REGULAR

- Less than 10 incremental loads per day: GOOD

If your structure has a BAD incremental load frequency index, we have some tips to optimize it:

- Update frequency: Many business users report having a need to update data in near real-time, they need to have the data always reflect the most recent moment. However this is not always real, check if it will be possible for the user to see the differences in the indicators with each update, in many cases a synchronization of the updates with the times when the user will be able to check them solves this problem, and so you does not keep running data updates for nothing, which only burden your BI and therefore your data source system

Criteria for Number of lines in incremental loads

The number of records returned in your incremental load is an important indicator of the efficiency of your updates. Many rows being returned with each update indicate that the given time period may be too long, and it is possible to shorten it, resulting in a faster and more efficient update.

The optimization index considers the following parameters for classification:

- More than 30% of total structure lines: BAD

- Between 10% and 30% of the total lines of the structure: REGULAR

- Less than 10% of total structure lines: GOOD

If your structure has a BAD index of number of rows in incremental loads, we have some tips to optimize it:

- Update period: validate if the incremental load update period is in accordance with the real need, it is common to want to ensure that if a slightly older data has been changed, it is corrected in the BI base, however this can be done on separate loads, so it’s better to have more incremental load schedules that look for different time periods than just one that tries to update all the data every time. For example, you can schedule an incremental load every 1 hour that always updates the records of the previous and current day, and at night do an incremental load that updates the 30-day records, thus decreasing the total number of records trafficked in the day.

Distributed Model Criteria

The distributed model is a small configuration at the time of creating your structure that makes a lot of difference in the performance of your visions. It is strongly recommended that you enable the distributed model, as it will ensure greater speed in opening your views and indicators. What this configuration does is a better structure of your data in the BI base, this is not visible to the user, but it is very important for the multidimensional analytical processor.

The optimization index considers the following parameters for classification:

- Distributed model disabled: BAD

- Distributed model enabled: GOOD